How to build and scale enterprise AI agents safely with the right architecture, guardrails, and governance to earn trust at every step.

Every business leader sees the promise of AI agents, but using them safely at scale can seem like a challenge.

Enterprise AI agents don’t just chat; they take meaningful action. For example, they can update customer accounts, route support tickets, or trigger workflows across systems, all while strictly following your company’s rules and staying fully transparent.

Giving an AI agent access to your systems can feel risky. How do you make sure it acts securely and stays compliant and reliable without overstepping?

This guide shows you how to design, govern and scale enterprise AI agents responsibly. You’ll learn how to build the right architecture, enforce safety guardrails, and implement governance that lets your agents evolve from simple chat assistants to trusted, action-taking teammates.

First, let’s define what we mean by an enterprise AI agent.

What is an enterprise AI agent?

An enterprise AI agent is more than just an intelligent chatbot. It’s a secure, action-taking system designed to operate inside your organization’s trusted environment.

These agents do more than just generate responses; they understand a user’s intent, access your data, and execute tasks on your behalf while staying within the strict rules and permissions defined by your organization. That might mean updating a customer’s account, routing a support ticket, or initiating a workflow across multiple systems, all while maintaining full transparency and compliance.

What makes enterprise AI agents unique is how they blend reasoning, action, and governance. They connect to your existing systems through APIs, follow your company’s policies, and provide an auditable record of everything they do.

In other words, they bring automation and intelligence together in a way that’s explainable, measurable, and safe for the enterprise.

How do you choose the right enterprise AI tool?

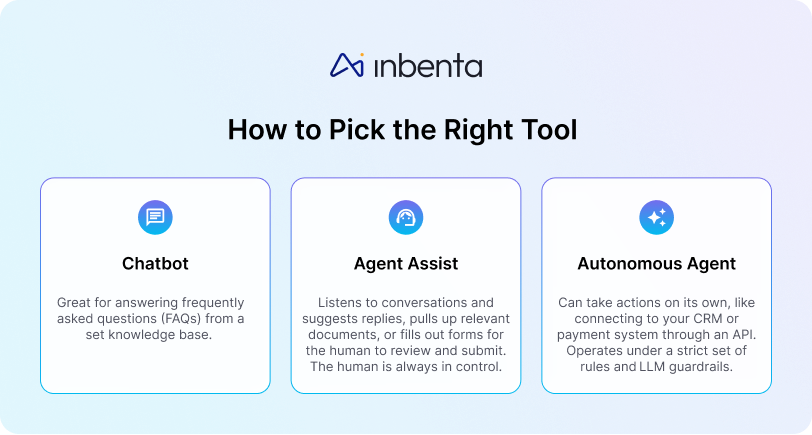

Before building any AI solution, it’s important to clarify your terms. Words like “chatbot,” “agent assist,” and “autonomous agent” are often used interchangeably, but they serve very different purposes.

A chatbot is the most basic conversational tool. It’s designed to answer frequently asked questions from a set knowledge base. It can provide information, but it can’t take action in other systems — think of it as a friendly, searchable manual.

Agent Assist acts as a co-pilot for your human team. It listens to conversations, suggests replies, pulls up relevant documents, and even fills out forms for humans to review and submit. A human stays in control at all times.

Autonomous agents, on the other hand, can take independent actions, such as connecting to a CRM or payment system via an API. These agents operate under a strict set of rules and LLM guardrails to ensure they act safely while performing tasks on their own.

What are some common agentic AI use cases?

Enterprise AI agents are already reshaping how work gets done across teams and departments for many leading businesses.

In customer support and customer experience (CX), agents can automatically resolve common issues, process returns or refunds, and escalate a task when human judgment is needed.

In IT and HR, they can handle everyday requests such as resetting passwords, managing access to systems or data, and running onboarding workflows, which reduces the backlog and frees up staff for more strategic tasks.

In sales and marketing, AI agents can qualify leads, enrich CRM data, and even trigger personalized campaigns based on live customer interactions.

Operations teams use them to automate order tracking, inventory updates, and logistics coordination.

And in finance or compliance, agents can reconcile transactions, validate invoices, or ensure that audit trails are automatically captured and stored — all with enterprise-grade visibility and control.

No matter the department, the impact is the same: less manual work and faster resolutions. By embedding intelligence directly into daily workflows, enterprise AI agents turn disconnected systems and repetitive processes into streamlined, measurable outcomes, all under your control.

Where should you start with agentic AI?

Deciding where to start depends on your business needs and the trust you have in your AI vendor.

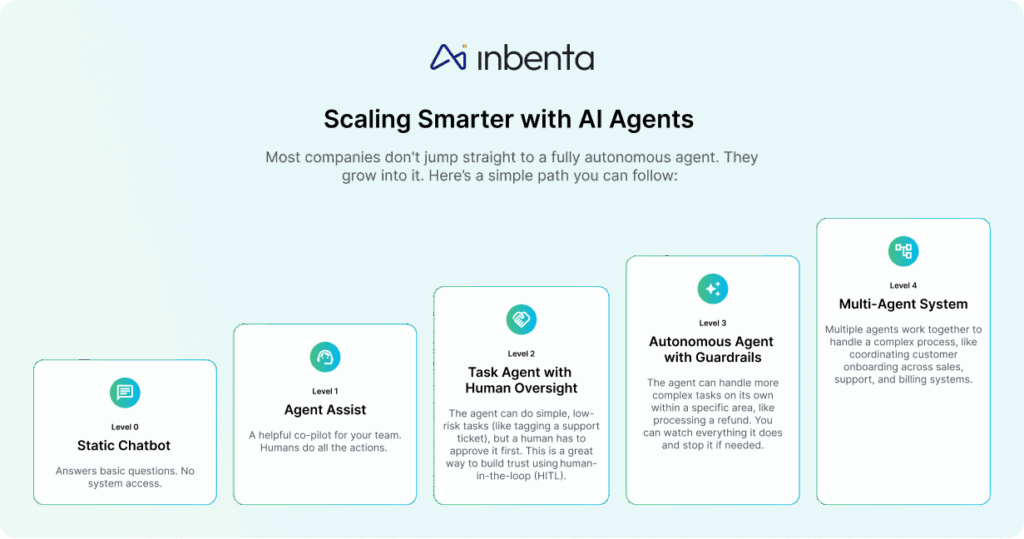

Some businesses evolve through stages — from a static chatbot, to an autonomous agent, all the way to a multi-agent system — that let them build confidence and control.

You can think of this evolution in five stages, starting with a chatbot that isn’t quite an agent and ending with a multi-agent system that coordinates work across departments:

Level 0: Static Chatbot

At this stage, you’re working with a basic conversational tool that can answer common questions from a fixed knowledge base. There’s no system access or real-time context, just predefined answers to predictable prompts. A static chatbot is a great way to test user engagement, gather insights on common questions, and identify where automation could add value next. It’s the foundation for learning how your users will interact with conversational interfaces before layering on intelligence or autonomy.

Level 1: Agent Assist

The next level is empowering your team with AI support. Agent Assist acts as a co-pilot for your human agents. It listens to conversations, suggests relevant responses, surfaces documents, and more. The human stays in control of every action, but the AI takes on the heavy lifting of gathering context and making recommendations. This tool can boost your team’s efficiency and reduce the cognitive load, and it can help your team build trust in AI-generated insights before the agent starts taking action on its own.

Level 2: Task Agent with Human Oversight

Here, your AI agent begins to act (though never alone). It performs simple, low-risk tasks like tagging support tickets, updating CRM fields, or routing requests to the right team, while humans approve or monitor each step. This is where human-in-the-loop (HITL) really shines: you can measure accuracy, monitor behavior, and refine policies in a controlled environment. It’s a safe, low-stakes way to build confidence in automation while proving business value through measurable productivity gains.
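To make the human-in-the-loop idea concrete, here is a minimal Python sketch of an approval gate: the agent can only propose an action, and nothing runs until a reviewer approves it. The names `ApprovalQueue`, `ProposedAction`, and `tag_ticket` are hypothetical and chosen for illustration; a real deployment would sit on top of your ticketing or CRM system’s own API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ProposedAction:
    """An action the agent wants to take, held until a human decides."""
    action_id: int
    name: str
    params: Dict[str, str]
    status: str = "pending"  # becomes "approved" or "rejected" after review


@dataclass
class ApprovalQueue:
    """Holds agent proposals; a handler runs only after a reviewer approves."""
    handlers: Dict[str, Callable[[Dict[str, str]], str]]
    pending: Dict[int, ProposedAction] = field(default_factory=dict)
    history: List[ProposedAction] = field(default_factory=list)
    next_id: int = 1

    def propose(self, name: str, params: Dict[str, str]) -> ProposedAction:
        if name not in self.handlers:
            raise ValueError(f"unknown action: {name}")
        action = ProposedAction(self.next_id, name, params)
        self.pending[action.action_id] = action
        self.next_id += 1
        return action

    def review(self, action_id: int, approve: bool) -> Optional[str]:
        """Record the human decision; execute the handler only on approval."""
        action = self.pending.pop(action_id)
        action.status = "approved" if approve else "rejected"
        self.history.append(action)
        return self.handlers[action.name](action.params) if approve else None


# Hypothetical low-risk task: tagging a support ticket.
def tag_ticket(params: Dict[str, str]) -> str:
    return f"ticket {params['ticket_id']} tagged as {params['tag']}"


queue = ApprovalQueue(handlers={"tag_ticket": tag_ticket})
proposal = queue.propose("tag_ticket", {"ticket_id": "T-100", "tag": "billing"})
result = queue.review(proposal.action_id, approve=True)
print(result)
```

Because every decision lands in `history`, the same structure gives you the data to measure how often reviewers approve or reject the agent’s proposals.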

Level 3: Autonomous Agent with Guardrails

By this point, your agent has earned its stripes. It can independently complete more complex tasks within defined boundaries. For instance, it can process refunds, check order statuses, or schedule appointments. Guardrails remain firmly in place to prevent overreach: permissions are tightly scoped, logs are immutable, and your team can pause or override the agent at any time. This level delivers tangible ROI as AI begins to reduce manual work at scale, all while maintaining the safety and transparency your organization requires.
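The three guardrails named above (scoped permissions, tamper-evident logs, and a pause switch) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design: `GuardedAgent`, the per-action amount limits, and the action names are all hypothetical. Note also that a hash chain only makes edits detectable; truly immutable logs would need append-only storage as well.

```python
import hashlib
import json
from typing import Dict, List


class GuardedAgent:
    """Scoped permissions, a pause switch, and a hash-chained action log."""

    def __init__(self, allowed_actions: Dict[str, float]):
        # Maps each permitted action to a hypothetical per-call amount limit.
        self.allowed_actions = allowed_actions
        self.paused = False
        self.log: List[dict] = []

    @staticmethod
    def _digest(prev_hash: str, entry: dict) -> str:
        payload = json.dumps({"prev": prev_hash, **entry}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def _append_log(self, entry: dict) -> None:
        # Each record stores the previous record's hash, so edits break the chain.
        prev_hash = self.log[-1]["hash"] if self.log else "genesis"
        record = {**entry, "prev": prev_hash, "hash": self._digest(prev_hash, entry)}
        self.log.append(record)

    def act(self, action: str, amount: float = 0.0) -> str:
        if self.paused:
            outcome = "blocked: agent paused"
        elif action not in self.allowed_actions:
            outcome = "blocked: action out of scope"
        elif amount > self.allowed_actions[action]:
            outcome = "blocked: amount over limit"
        else:
            outcome = "executed"
        # Blocked attempts are logged too, not just successful actions.
        self._append_log({"action": action, "amount": amount, "outcome": outcome})
        return outcome

    def verify_log(self) -> bool:
        """Recompute the chain; return False if any record was altered."""
        prev_hash = "genesis"
        for record in self.log:
            entry = {k: v for k, v in record.items() if k not in ("prev", "hash")}
            if record["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


agent = GuardedAgent({"refund": 100.0, "check_order_status": 0.0})
r1 = agent.act("refund", 40.0)     # within scope and limit
r2 = agent.act("refund", 500.0)    # over the refund limit
r3 = agent.act("delete_account")   # never granted
agent.paused = True                # a human hits the pause switch
r4 = agent.act("refund", 10.0)
print(r1, r2, r3, r4, agent.verify_log(), sep=" | ")
```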

Level 4: Multi-Agent System

At the top of the maturity curve, multiple specialized agents work together seamlessly across departments. One might handle onboarding new customers, another might manage billing updates, and a third could track fulfillment, all coordinating through APIs and shared context. This is where enterprise AI becomes an integrated part of your business fabric, orchestrating complex processes across systems and teams. Governance, observability, and performance monitoring keep these agents aligned, auditable, and continuously improving.

How do you build an enterprise AI agent you can trust?

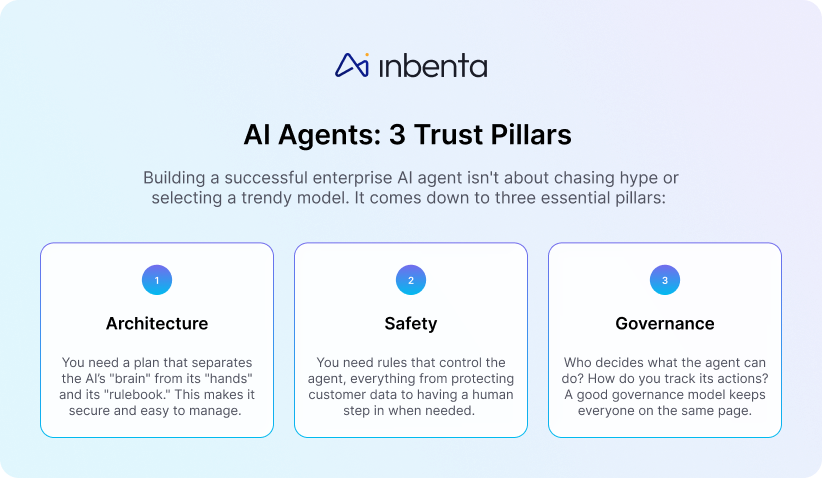

Building a successful enterprise AI agent isn’t about chasing the latest hype or selecting a trendy model. At its core, your trust will rest on three essential pillars: architecture, safety, and governance.

Architecture

A solid architecture separates the AI’s “brain” (its reasoning and decision-making engine) from its “hands” (the tools and APIs it uses to act) and its “rulebook” (the governance and safety policies that control what it’s allowed to do). This separation makes the system both secure and flexible. You can upgrade or replace an LLM without disrupting workflows, or integrate new tools without retraining the model from scratch. You can update your policies centrally and apply them consistently across every agent you create. And if something goes wrong, this modular design limits the risk — you can pause one component, audit it, and replace it without bringing the entire system down.
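One way to picture the brain, hands, and rulebook split is as three separate objects that an agent composes. The sketch below is a simplified illustration: `KeywordBrain` stands in for a real LLM call, and the class names, tool names, and the fixed user `alice` are assumptions made for the example.

```python
from typing import Callable, Dict, Protocol, Set, Tuple


class Brain(Protocol):
    """Reasoning engine: maps a request to (tool_name, arguments)."""
    def decide(self, request: str) -> Tuple[str, Dict[str, str]]: ...


class KeywordBrain:
    """Stand-in for an LLM; a real system would call a model here."""
    def decide(self, request: str) -> Tuple[str, Dict[str, str]]:
        if "password" in request.lower():
            return "reset_password", {"user": "alice"}
        return "route_ticket", {"queue": "general"}


class Rulebook:
    """Central policy: which tools agents are allowed to call."""
    def __init__(self, allowed_tools: Set[str]):
        self.allowed_tools = allowed_tools

    def permits(self, tool: str) -> bool:
        return tool in self.allowed_tools


class Agent:
    """Composes a brain, a set of tools (the hands), and a rulebook."""
    def __init__(self, brain: Brain, tools: Dict[str, Callable[..., str]],
                 rulebook: Rulebook):
        self.brain = brain
        self.tools = tools
        self.rulebook = rulebook

    def handle(self, request: str) -> str:
        tool, args = self.brain.decide(request)
        if not self.rulebook.permits(tool):
            return f"denied: {tool}"
        return self.tools[tool](**args)


tools = {
    "reset_password": lambda user: f"reset link sent to {user}",
    "route_ticket": lambda queue: f"routed to {queue}",
}
rulebook = Rulebook({"route_ticket"})
agent = Agent(KeywordBrain(), tools, rulebook)

before = agent.handle("I forgot my password")
rulebook.allowed_tools.add("reset_password")  # policy change, no model change
after = agent.handle("I forgot my password")
print(before)
print(after)
```

Because the agent depends only on the `Brain` interface, you could swap `KeywordBrain` for a different model wrapper without touching the tools or the policy.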

Safety

Safety isn’t an afterthought. It must be designed into the system from day one. Every layer of the AI agent’s environment needs to have guardrails that define what the AI can see, say, and do. These rules protect sensitive customer data, prevent the agent from accessing unauthorized systems, and ensure that every action it takes aligns with your company’s policies.

Just as important, safety architecture includes human-in-the-loop (HITL) controls, so people can monitor, override, or refine the agent’s behavior in real time. When something unexpected happens — whether it’s a policy breach, a system error, or a confusing query — humans stay firmly in control. This combination of automated safeguards and human oversight gives you the confidence that the agent will act responsibly, even as it scales across different teams and use cases.

Governance

Governance is about who decides what the agent can do, and how every decision, action, and outcome is tracked. A strong governance model sets clear accountability and ensures that the right people are responsible for approving use cases, managing risk, and reviewing the agent’s performance. It also establishes transparency across the organization with dashboards, logs, and reports, so that any stakeholder can see exactly how the agent is performing and where improvements are needed. With governance in place, your security, compliance, and operations teams are all working from the same playbook. This shared visibility keeps innovation moving fast without sacrificing safety, ethics, or trust.

A simple AI agent checklist

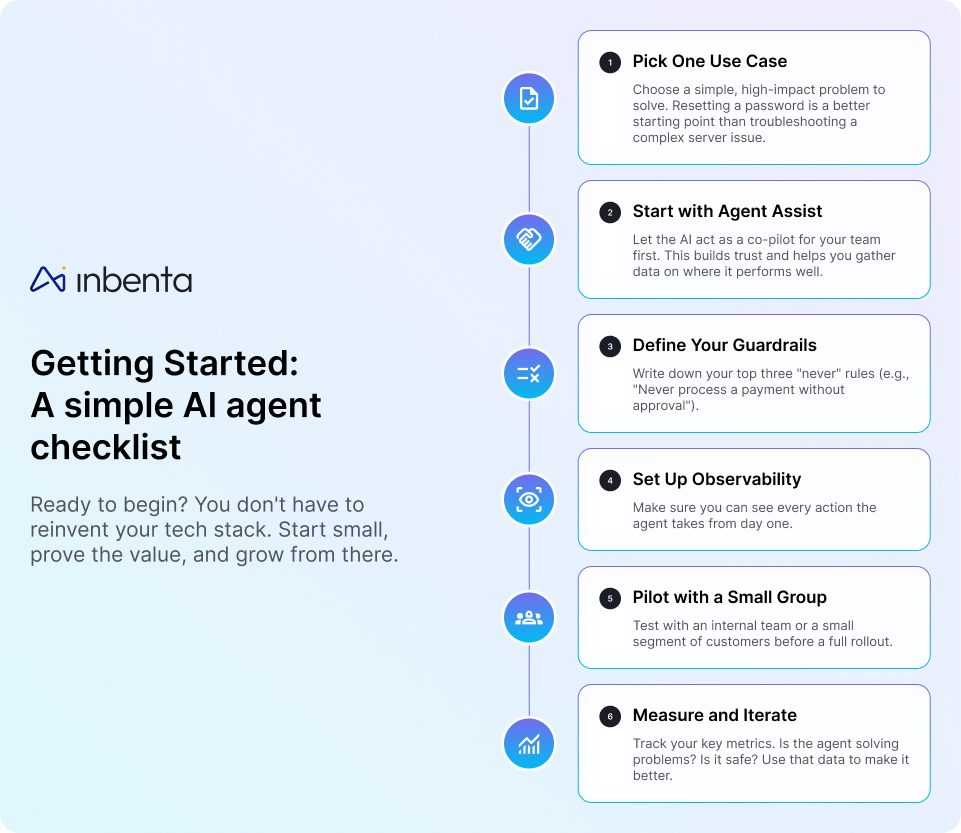

Ready to start your AI agent journey? You don’t need to reinvent your tech stack or launch a massive transformation on day one. The key is to start small, prove the value quickly, and scale with confidence. Here’s a practical checklist to guide you:

1. Pick one use case

Focus on a simple, high-impact problem that’s easy to measure and safe to automate. Instead of tackling complex troubleshooting or decision-heavy workflows, start with a contained process like resetting passwords, routing support tickets, or updating profile data. A well-chosen first use case gives you clear metrics, quick wins, and valuable lessons you can apply to future agent projects.

2. Start with Agent Assist

Before giving the AI control, let it learn alongside your team. In Agent Assist mode, the AI acts as a co-pilot suggesting responses, surfacing knowledge, or drafting actions for human approval. This builds trust and transparency while helping your team understand how and where the AI adds value. It’s also a chance to collect performance data and feedback that will shape your guardrails and governance model later.

3. Define your guardrails

Safety starts with clarity. Write down your top three “never” rules — the actions your AI agent must not take under any circumstances. For example, “Never process a payment without approval,” or “Never share sensitive customer data.” Documenting these rules upfront sets clear boundaries and informs your policy engine, access controls, and human-in-the-loop checkpoints. Guardrails not only protect your business but also give your team confidence to experiment safely.
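Once your “never” rules are written down, they can be expressed as simple checks that run before any action executes. The Python sketch below encodes the two example rules from this section; the action fields (`type`, `approved`, `contains_sensitive_data`) are hypothetical and would map to whatever your own policy engine exposes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class NeverRule:
    """A hard boundary: description plus a check that detects a violation."""
    description: str
    violated_by: Callable[[Dict[str, object]], bool]


NEVER_RULES: List[NeverRule] = [
    NeverRule(
        "Never process a payment without approval",
        lambda a: a.get("type") == "process_payment"
        and not a.get("approved", False),
    ),
    NeverRule(
        "Never share sensitive customer data",
        lambda a: a.get("type") == "send_message"
        and bool(a.get("contains_sensitive_data", False)),
    ),
]


def first_violation(action: Dict[str, object]) -> Optional[str]:
    """Return the description of the first rule the action breaks, or None."""
    for rule in NEVER_RULES:
        if rule.violated_by(action):
            return rule.description
    return None


unapproved = first_violation({"type": "process_payment", "amount": 25})
approved = first_violation({"type": "process_payment", "approved": True})
leak = first_violation({"type": "send_message", "contains_sensitive_data": True})
print(unapproved)
print(approved)
print(leak)
```

Keeping the rules in one list means a blocked action can report exactly which rule it broke, which is useful context for the human who reviews it.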

4. Set up observability

From the first day your agent goes live, make sure you can see everything it does. Observability tools create audit logs, track metrics, and flag anomalies so you can monitor performance and compliance in real time. This visibility lets you troubleshoot issues quickly, stay confident in your regulatory compliance, and continuously refine your AI’s behavior. Think of it as the dashboard that keeps innovation and safety in balance.
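As a rough illustration of what observability can look like in code, the sketch below writes one structured audit event per action and flags an anomaly when the recent error rate crosses a threshold. `AgentMonitor`, the window size, and the 20% threshold are assumptions for the example; a dedicated observability platform would normally handle storage and alerting.

```python
import json
from collections import deque
from datetime import datetime, timezone
from typing import Deque, List


class AgentMonitor:
    """Keeps a structured audit log and watches the recent error rate."""

    def __init__(self, window: int = 20, max_error_rate: float = 0.2):
        self.audit_log: List[str] = []
        # Sliding window of failure flags (True means the action failed).
        self.recent: Deque[bool] = deque(maxlen=window)
        self.max_error_rate = max_error_rate

    def record(self, action: str, ok: bool, latency_ms: float) -> None:
        event = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "ok": ok,
            "latency_ms": latency_ms,
        }
        self.audit_log.append(json.dumps(event))  # one JSON line per action
        self.recent.append(not ok)

    def error_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def anomaly(self) -> bool:
        """True when the recent error rate exceeds the configured threshold."""
        return self.error_rate() > self.max_error_rate


monitor = AgentMonitor(window=5, max_error_rate=0.2)
for _ in range(4):
    monitor.record("route_ticket", ok=True, latency_ms=120.0)
healthy = monitor.anomaly()

monitor.record("update_profile", ok=False, latency_ms=900.0)
monitor.record("update_profile", ok=False, latency_ms=950.0)
degraded = monitor.anomaly()
print(healthy, degraded, monitor.error_rate())
```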

5. Pilot with a small group

Don’t launch an AI agent across your organization right away. Start with an internal pilot or a limited customer segment where feedback loops are fast and manageable. This lets your team validate workflows, adjust rules, and address unforeseen issues before you scale. A controlled pilot builds confidence within your organization and provides a proof point for broader adoption.

6. Measure and iterate

Treat your first deployment as a learning cycle, not a finish line. Track key metrics such as resolution time, accuracy, escalation rates, and user satisfaction. Are agents solving real problems? Are they operating safely and within their boundaries? Use this data to fine-tune performance, retrain models if necessary, and expand responsibly. Continuous improvement ensures your AI agent gets smarter — and your business gets stronger — with every iteration.
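The metrics named above are straightforward to compute once each interaction is recorded. Here is a small Python sketch; the `Interaction` fields and the sample values are made up for illustration, and how you judge an outcome “correct” is something your own review process would define.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Interaction:
    resolution_minutes: float
    correct: bool      # outcome judged correct on review
    escalated: bool    # handed off to a human
    satisfaction: int  # for example, a 1-5 survey score


def summarize(interactions: List[Interaction]) -> Dict[str, float]:
    """Compute resolution time, accuracy, escalation rate, and satisfaction."""
    n = len(interactions)
    if n == 0:
        raise ValueError("no interactions to summarize")
    return {
        "avg_resolution_minutes": mean(i.resolution_minutes for i in interactions),
        "accuracy": sum(i.correct for i in interactions) / n,
        "escalation_rate": sum(i.escalated for i in interactions) / n,
        "avg_satisfaction": mean(i.satisfaction for i in interactions),
    }


# Illustrative sample data, not real results.
sample = [
    Interaction(4.0, True, False, 5),
    Interaction(6.0, True, True, 4),
    Interaction(8.0, False, False, 3),
    Interaction(2.0, True, False, 4),
]
report = summarize(sample)
print(report)
```

Running the same summary on each pilot cycle gives you a like-for-like comparison as you adjust rules or expand scope.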

What's next?

Building enterprise AI agents is a journey, not a destination. The technology is moving fast, but the principles of good architecture, strong safety, and clear governance will always apply. By designing these elements in from the start, you can move forward with confidence, knowing you’re building AI that is not only powerful but also responsible and trustworthy.

FAQs: Building Trustworthy Enterprise AI Agents

What exactly is an enterprise AI agent?

An enterprise AI agent is more than just a chatbot: it doesn’t just talk; it takes action. It can update customer accounts, process returns, or route support tickets, all while following company rules and keeping every action transparent and auditable.

How is an AI agent different from a regular chatbot?

A chatbot answers simple questions from a knowledge base. Agent Assist supports human agents by suggesting responses or retrieving relevant information. A true AI agent can safely take actions within your systems under defined guardrails and governance.

Why does the architecture of AI agents matter so much?

Architecture keeps AI both secure and adaptable. A strong setup separates the AI’s “brain” (reasoning), “hands” (tools), and “rulebook” (allowed actions), allowing updates or pauses without disrupting workflows.

What are AI guardrails and why do I need them?

AI guardrails are strict boundaries that define what an AI agent can and cannot do, such as never processing a payment without approval. Paired with human-in-the-loop controls, they ensure safe operations and protect data.

Where’s the best place to start with an enterprise AI agent?

Start small with a high-impact task like resetting passwords or routing tickets. Launch in Agent Assist mode, gather feedback, track key metrics, and scale responsibly to improve AI performance and safety over time.
